Click Farm Fraud in PPC Advertising: Exposing the $100 Billion Threat and Google's Defense Infrastructure


Executive Summary

Click farm fraud represents one of the largest, most sophisticated threats to digital advertising ROI, draining an estimated $104 billion annually from advertiser budgets worldwide as of 2025, with projections exceeding $142 billion by 2027[1]. While click farms have evolved from simple, easily-detected operations into highly coordinated, AI-powered fraud networks, the fundamental deception remains consistent: scammers deploy human workers, bots, malware-infected devices, and increasingly, machine learning systems to generate fraudulent clicks that produce zero consumer value yet trigger full advertiser payment.

This report exposes the technical sophistication of modern click farm operations, details how fraudsters systematically evade Google's detection mechanisms, and examines Google's multi-layered defense infrastructure, including over 200 automated filters, large language models, behavioral biometrics, and manual review teams. Critically, research indicates Google detects only 40-60% of fraudulent clicks, leaving $35 billion in undetected fraud flowing through Google's platforms annually[2]. The arms race between fraud innovation and detection capabilities continues to intensify, with fraudsters now employing AI-driven adaptive tactics that adjust their methods in real time when they encounter detection systems.

Key Finding: Despite Google's sophisticated 200+ filter infrastructure and AI-powered detection systems, approximately 40-60% of fraudulent clicks escape detection, translating to $35 billion in undetected annual fraud losses.

The Scale of the Problem: Financial Impact and Industry Dynamics

Click fraud imposes quantifiable, escalating economic damage across the digital advertising ecosystem. Annual fraud costs reached $104 billion in 2025—an 18% increase from $88 billion in 2024—with projections suggesting $142 billion in losses by 2027[6]. This exponential growth reflects two concurrent trends: expanding global ad spend (projected at $1.2 trillion worldwide in 2026) and fraudsters' improving operational sophistication[7].
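
The growth figures above can be checked with a few lines of arithmetic. The sketch below uses only the dollar amounts quoted in this section; the helper name `growth_rate` is introduced here for illustration, and the calculation assumes steady compounding between the 2025 and 2027 figures.

```python
def growth_rate(start, end, years=1):
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

# Figures in billions of USD, taken from the paragraph above.
LOSSES = {2024: 88, 2025: 104, 2027: 142}

# Year-over-year change from 2024 to 2025.
yoy_2025 = growth_rate(LOSSES[2024], LOSSES[2025])

# Annual rate implied by the 2027 projection, assuming steady compounding.
implied_cagr = growth_rate(LOSSES[2025], LOSSES[2027], years=2)

print(f"2024 to 2025: {yoy_2025:.1%}")
print(f"Implied 2025 to 2027 annual rate: {implied_cagr:.1%}")
```

Under these assumptions the projection implies an annual rate of roughly 17%, close to the reported 18% increase, which is consistent with steady compounding rather than an accelerating rate.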

The fraud rate itself remains stubbornly consistent at 14-22% of all paid clicks across platforms, meaning roughly one in every five to seven clicks may be fraudulent[8]. Industries with high customer acquisition costs suffer disproportionately. Finance and legal services face fraud inflation of 35% with virtually no conversion, while mobile app marketing and programmatic display networks experience invalidation rates ranging from 20-40%[9]. Affiliate marketing channels report 25% of generated leads as fraudulent, costing advertisers an estimated $3.4 billion specifically in affiliate fraud losses[10].

The economic irony is profound: despite the massive resources invested in fraud detection—Google's Ad Traffic Quality Team alone employs PhDs, data scientists, and researchers globally—independent analysis suggests advertiser budgets continue hemorrhaging money through fraud that detection systems cannot identify or prevent retroactively. Google acknowledges issuing automatic credits for invalid activity detected after billing occurs, yet the damage to campaign optimization is already done. During the lag time between fraud occurrence and detection (sometimes weeks or months), advertisers have made budget allocation decisions based on fraud-corrupted data, initiated unprofitable scaling of compromised campaigns, and mis-attributed conversions to channels poisoned with invalid traffic[11].

Chart: Global Click Fraud Financial Impact and Fraud Rate Trend (2024-2027)

A dual-axis visualization displaying the escalating financial cost of click fraud, from $88 billion in 2024 to a projected $142 billion by 2027, alongside the persistent fraud rate of 14-22% of all paid clicks. The data shows that, despite growing industry awareness, absolute dollar losses continue to expand even as the fraud rate stays roughly constant as a percentage of paid clicks.

Click Farm Operations: Business Model and Operational Structure

The Economics of Human-Driven Fraud

Click farms represent the human-intensive branch of click fraud, operating through a straightforward but scalable business model. Large operations employ workers—typically in low-wage countries—to manually perform fraudulent actions across multiple accounts and devices. The pricing structure is commodity-like: $5 per 1,000 likes, $20 per 1,000 ad clicks, $50 for app installs with reviews[12]. Workers operate on piece-rate compensation, earning pennies per task, with supervisors monitoring quotas and verifying completed work. Some sophisticated operations employ management software coordinating tasks across hundreds of workers and devices simultaneously.

What makes click farms particularly dangerous is their inherent advantage: they use real human beings on real devices with genuine accounts and legitimate browsing histories. Unlike simple automated bots, click farm workers manually solve CAPTCHAs, exhibit natural mouse movement patterns, and generate user agent strings indistinguishable from legitimate traffic. This human element bypasses the entire category of bot detection systems that rely on CAPTCHA as a verification layer[13]. They can manually evaluate a landing page, recognize when fraud detection appears, and deliberately slow their click patterns to avoid statistical anomalies that trigger alarms.

Click farm operations have industrialized significantly. Workers typically operate during defined shifts aligned with the fraud operation's timezone rather than the advertiser's target market—a pattern that, when detected, reveals coordinated fraud. Device sharing among shift-based workers creates fingerprinting patterns where multiple ostensibly different users show identical device characteristics (same GPU configuration, installed fonts, screen resolution) because they're literally operating the same physical device throughout the day. Supervisory oversight ensures consistent execution and prevents workers from accidentally clicking the "convert" button or behaving too naturally, which would reduce fraud effectiveness[14].
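
The device-sharing pattern described above lends itself to a simple grouping check. The following is a minimal sketch, not a description of any platform's actual method: the session fields (`gpu`, `fonts`, `screen`, `user_id`) and the `min_users` threshold are hypothetical, chosen only to mirror the characteristics named in the paragraph.

```python
from collections import defaultdict

def shared_fingerprints(sessions, min_users=3):
    """Return fingerprints observed under at least `min_users` distinct user IDs."""
    users_by_fp = defaultdict(set)
    for s in sessions:
        # Hypothetical fingerprint: GPU, sorted font list, and screen resolution.
        fp = (s["gpu"], tuple(sorted(s["fonts"])), s["screen"])
        users_by_fp[fp].add(s["user_id"])
    return {fp: users for fp, users in users_by_fp.items() if len(users) >= min_users}

sessions = [
    {"user_id": "u1", "gpu": "Adreno 610", "fonts": ["Roboto", "Noto"], "screen": "720x1600"},
    {"user_id": "u2", "gpu": "Adreno 610", "fonts": ["Noto", "Roboto"], "screen": "720x1600"},
    {"user_id": "u3", "gpu": "Adreno 610", "fonts": ["Roboto", "Noto"], "screen": "720x1600"},
    {"user_id": "u4", "gpu": "Apple M2", "fonts": ["Helvetica"], "screen": "2560x1664"},
]

flagged = shared_fingerprints(sessions)
for fp, users in flagged.items():
    print(fp[0], fp[2], sorted(users))
```

A check like this would need more signals in practice, since many legitimate users own the same phone model with identical default settings; a shared fingerprint is a reason to look closer, not proof of fraud.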

Monetization Vectors

Click farms target three primary revenue streams: publisher earnings manipulation, competitor budget draining, and app installation fraud. In publisher earnings schemes, click farm networks coordinate with website owners hosting Google AdSense or similar programs. The website owner directs click farm traffic to the ads displayed on their own site, artificially inflating revenue without delivering any legitimate audience value. This directly violates Google policy but persists because of the detection lag. Competitor budget attacks use the same mechanics with a different goal: fraudsters purchase click farm services targeting a competitor's ads, intending to exhaust the competitor's daily budget so that their own ads appear more prominently. Mobile app fraudsters use click farms to generate fake app installs with positive reviews, manipulating app store rankings and attribution systems. Some advanced operations combine human workers with automated systems: humans establish initial browsing patterns and legitimate-appearing sessions, then hand off to bots that maintain those exact behavioral signatures while scaling the operation[15].

How Fraudsters Evade Detection: Technical Methods and Evasion Tactics

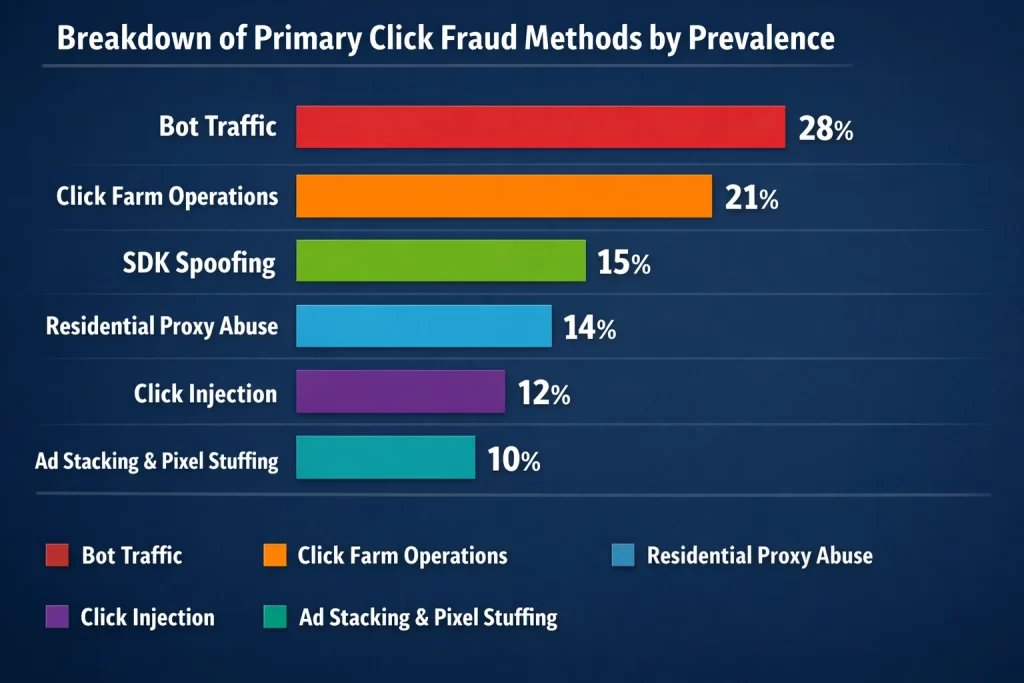

Chart: Breakdown of Primary Click Fraud Methods by Prevalence

A horizontal bar chart illustrating the distribution of click fraud tactics currently deployed by scammers. Bot-driven traffic and click farm operations account for nearly half of all fraud, while emerging techniques like SDK spoofing and residential proxy abuse represent significant and growing threats in the mobile advertising and detection-evasion space.


IP Address and Geolocation Spoofing

The most fundamental detection strategy is IP-based blocking—flagging traffic from known data centers, VPN providers, or previously identified fraud sources. Fraudsters counter this through multiple sophistication layers:

Residential Proxies

Residential proxies represent the current frontier of IP evasion. Unlike data center proxies that originate from identifiable hosting providers, residential proxies route traffic through actual household IP addresses registered to legitimate residential internet subscribers[16]. Ad platforms easily detect and block data center proxy ranges, making them mostly ineffective for sophisticated operations. Residential proxies, conversely, appear identical to traffic from real users in real homes. Detection systems struggle because residential IPs are genuinely legitimate—they belong to real people who aren't fraudsters. Fraudsters rent access to these residential IP pools, often with high-frequency rotation capabilities. Each click can appear to originate from a different geographic location, defeating per-IP rate limiting and simple geographic verification systems. Geographic manipulation techniques use proxies to present clicks from high-value regions (where CPCs are maximized) or from users' home cities (to appear locally relevant), inflating both the apparent value and geographic relevance of fraudulent traffic[17].

IP Rotation and Proxy Chains

IP rotation and proxy chains employ multiple layers of obfuscation. Advanced fraud operations chain proxies sequentially—each proxy passes traffic through another proxy before reaching the ad network—making origin attribution nearly impossible. Rotating proxy services automatically change IP addresses between clicks or even within sessions, ensuring no single IP receives enough traffic volume to trigger detection thresholds. This defeats both static blocklists and statistical anomaly detection that flags single IPs generating too many clicks in short time periods[18].
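
To illustrate why rotation defeats per-IP thresholds, the sketch below implements a basic sliding-window click counter and runs the same ten clicks through it twice: once from a single address and once spread across ten addresses. The class name, the limit of 3 clicks per 60 seconds, and the IP addresses (drawn from documentation ranges) are all assumptions made for the example.

```python
from collections import defaultdict, deque

class PerIpRateLimiter:
    """Flags an IP once it exceeds `max_clicks` within `window_seconds`."""

    def __init__(self, max_clicks, window_seconds):
        self.max_clicks = max_clicks
        self.window = window_seconds
        self.history = defaultdict(deque)

    def is_suspicious(self, ip, timestamp):
        clicks = self.history[ip]
        clicks.append(timestamp)
        # Drop clicks that have aged out of the sliding window.
        while clicks and clicks[0] <= timestamp - self.window:
            clicks.popleft()
        return len(clicks) > self.max_clicks

def count_flags(events, max_clicks=3, window_seconds=60):
    """Count how many events in the stream get flagged."""
    limiter = PerIpRateLimiter(max_clicks, window_seconds)
    return sum(limiter.is_suspicious(ip, ts) for ip, ts in events)

# Ten clicks in ten seconds from one address versus ten rotating addresses.
single_ip = [("203.0.113.7", t) for t in range(10)]
rotating = [(f"198.51.100.{t}", t) for t in range(10)]

print(count_flags(single_ip))
print(count_flags(rotating))
```

The single-address stream trips the limiter from its fourth click onward, while the rotating stream never does, because no individual address accumulates more than one click.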

Device and Browser Fingerprinting Evasion

Modern ad fraud detection relies heavily on device fingerprinting—collecting hardware, software, and browser characteristics to create unique device identifiers. Sophisticated bots and click farms systematically defeat these techniques:

Browser and User Agent Rotation

Browser and user agent rotation involve switching between different browser types, versions, and operating systems. Rather than every click appearing to come from Chrome 120 on Windows 11, fraudsters rotate through diverse browser configurations to appear as diverse traffic from different users. User agent strings are spoofed to reflect realistic combinations[19].

Canvas Fingerprinting Evasion

Canvas fingerprinting evasion targets one of the most reliable fingerprinting techniques. Canvas fingerprinting works by rendering hidden graphics on a webpage and analyzing the pixel data—rendering differences across devices create unique fingerprints due to hardware differences, driver versions, and GPU configurations. Advanced bots employ anti-fingerprinting techniques, obfuscation methods, and custom TLS fingerprinting to avoid revealing consistent device signatures[20]. Some fraudsters use headless browsers and specialized anti-detection browsers designed specifically to defeat fingerprinting, with one 2025 analysis finding advanced bots evading detection in 93% of cases when anti-fingerprinting techniques were employed[21].
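
The mechanism can be modeled in a few lines. Real canvas fingerprinting runs in the browser and reads pixels from a rendered canvas; the sketch below is a simplified Python model that assumes the pixel buffer is already available as bytes. The function names and the noise strategy (flipping one low-order bit) are illustrative assumptions, not a description of any specific anti-detection tool.

```python
import hashlib
import random

def canvas_fingerprint(pixels: bytes) -> str:
    """Hash a rendered pixel buffer into a short identifier."""
    return hashlib.sha256(pixels).hexdigest()[:16]

def with_noise(pixels: bytes, rng: random.Random) -> bytes:
    """Flip the lowest bit of one randomly chosen byte."""
    noisy = bytearray(pixels)
    noisy[rng.randrange(len(noisy))] ^= 1
    return bytes(noisy)

# Stand-in for the RGBA output of a hidden canvas render on one device.
render = bytes([10, 20, 30, 255] * 64)

# Without noise, the same device yields the same fingerprint on every visit.
stable_a = canvas_fingerprint(render)
stable_b = canvas_fingerprint(render)

# With per-visit noise, the fingerprint no longer matches the device's true value.
rng = random.Random(0)
noisy = canvas_fingerprint(with_noise(render, rng))

print(stable_a == stable_b, noisy == stable_a)
```

A change too small to be visible is enough to alter the hash, which is why noise injection breaks fingerprint stability. Detection systems can respond by looking for fingerprints that change on every visit, which is itself an unusual signal.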

Device Fingerprint Rotation

Device fingerprint rotation involves manually or automatically switching device characteristics between clicks. A fraudster operating a click farm might use one physical device configured with multiple virtual machine instances, each with unique browser profiles, installed fonts, screen resolutions, and timezone settings. Between clicks, they switch VMs, presenting entirely different fingerprints to detection systems. When detection systems observe this diversity, it initially appears as legitimate traffic from multiple devices—the intended deception[22].

Behavioral Manipulation and Human Mimicry

The most sophisticated fraud detection systems now analyze behavioral signals rather than just device characteristics. Fraudsters have adapted:

Mouse Movement and Interaction Simulation

Mouse movement and interaction simulation involve automated systems replicating natural cursor movement—curved paths with realistic acceleration and deceleration rather than instant jumps, variable clicking speeds, scrolling behaviors, and hesitation patterns when reading content. Advanced bots simulate specific behaviors like adding items to shopping carts, deleting them, filling form fields partially, abandoning them, then scrolling before clicking ads[23]. Timing variation is implemented to avoid the telltale signature of perfectly-spaced clicks that indicate automation—fraudsters introduce random delays, creating click patterns indistinguishable from human browsing rhythm.
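
The "perfectly-spaced clicks" signature can be expressed as a low coefficient of variation in the gaps between clicks. The sketch below is one simple way to measure it; the function names and the `min_cv` threshold of 0.1 are assumptions for illustration, and the third example shows how modest jitter is enough to slip past this particular check.

```python
from statistics import mean, pstdev

def timing_cv(timestamps):
    """Coefficient of variation of the gaps between consecutive clicks."""
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three timestamps")
    avg = mean(gaps)
    return pstdev(gaps) / avg if avg else 0.0

def looks_automated(timestamps, min_cv=0.1):
    """Flag click streams whose spacing is too regular to be human."""
    return timing_cv(timestamps) < min_cv

bot = [0, 5, 10, 15, 20, 25]        # fixed 5-second interval
human = [0, 3, 19, 24, 61, 70]      # irregular browsing rhythm
jittered = [0, 4, 11, 15, 22, 26]   # bot with small random-looking delays

for name, stream in [("bot", bot), ("human", human), ("jittered", jittered)]:
    print(name, looks_automated(stream))
```

Only the fixed-interval stream is flagged. The jittered stream passes, which matches the point made above: timing checks alone stop naive automation but not operations that deliberately randomize their delays.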

Session Depth Variation

Session depth variation mimics legitimate user journey depth. Rather than clicking an ad and immediately bouncing, sophisticated fraud operations visit multiple pages, spend varying durations on each, trigger scroll events, and potentially interact with page elements before completing the fraudulent action. This depth makes the activity appear genuinely interested[24].

Behavioral Pattern Establishment

Behavioral pattern establishment describes the most resource-intensive evasion technique. Some click farms establish legitimate browsing history on accounts before conducting fraud. Workers spend time normally browsing the internet, watching videos, making occasional legitimate purchases, and building browsing history indistinguishable from real users. Only after establishing baseline behavior does the fraudulent activity commence, making the fraud appear as a natural extension of legitimate account behavior rather than aberration[25].

AI-Powered Behavioral Replication

AI-powered behavioral replication represents the 2025-2026 frontier. Machine learning systems can analyze millions of real user interactions, extract behavioral patterns, and replicate them automatically. AI systems learn the distribution of click timings, mouse speeds, page engagement durations, and conversion behaviors from legitimate users, then generate synthetic traffic following these learned patterns. This AI-generated traffic approaches legitimate user behavior so closely that traditional statistical anomaly detection struggles to distinguish them[26].

Mobile-Specific Fraud Techniques

Mobile advertising introduces distinct fraud vectors not present in desktop environments:

SDK Spoofing

SDK spoofing involves manipulating software development kits used to build mobile apps. Fraudsters insert malicious code into SDKs, which developers unknowingly download and incorporate into their apps. Once deployed, the infected SDK generates fake install events, click events, and engagement signals without actual user interaction. DrainerBot, one infamous example, was distributed through compromised SDKs in apps downloaded over 10 million times, silently generating video ad views while consuming gigabytes of device data and battery life[27]. SourMint, the name given to malicious code found in the Mintegral SDK, was one of the largest SDK-based fraud operations on iOS. Because SDK fraud generates signals directly within the attribution infrastructure, detection systems struggle to differentiate false signals from genuine events: the malware creates authentic-looking API calls that appear identical to legitimate install attribution[28].

Click Injection

Click injection exploits the mobile app installation process. Android devices running malicious apps detect when users install applications and inject fraudulent attribution clicks at the last possible moment before installation completes. Attribution systems, measuring click-to-install conversion windows, often grant credit to the last detected click, allowing fraudsters to claim credit for organic user installs they didn't drive[29]. Click flooding represents a related technique where fraudsters generate massive volumes of clicks on install ads, statistically hoping some will fall within attribution windows when users coincidentally install the same app organically[30].
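
A small model of last-click attribution shows how an injected click wins credit, and how the click-to-install time can expose it. This is a sketch under stated assumptions: the record layout, the seven-day window, and the 10-second minimum are hypothetical values chosen for the example, and `install_time` is taken to mean the moment the install is recorded.

```python
WEEK = 7 * 24 * 3600

def last_click(clicks, install_time, window_seconds=WEEK):
    """Return the most recent click inside the attribution window, if any."""
    eligible = [c for c in clicks if 0 <= install_time - c["time"] <= window_seconds]
    return max(eligible, key=lambda c: c["time"], default=None)

def suspicious_ctit(click, install_time, min_seconds=10):
    """Flag clicks that land implausibly close to the install."""
    return install_time - click["time"] < min_seconds

clicks = [
    {"source": "network_a", "time": 1_000},    # genuine ad click
    {"source": "injector", "time": 49_997},    # injected just before install
]
install_time = 50_000

winner = last_click(clicks, install_time)
print(winner["source"], suspicious_ctit(winner, install_time))
```

The injected click takes the credit because it is the latest one in the window, yet it arrives only three seconds before the install. A user cannot realistically click an ad, download, and install an app that quickly, so a very short click-to-install time is a useful warning sign.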

Install Hijacking

Install hijacking involves intercepting the installation process itself, inserting fraudulent attribution data to claim credit for installs. This sophisticated technique exploits vulnerabilities in mobile attribution tracking infrastructure[31].

Display Network and Programmatic Fraud

Display network fraud leverages the vast scale of the Google Display Network (millions of publishers) and programmatic buying automation:

Pixel Stuffing

Pixel stuffing renders advertisements in 1×1 pixel areas—invisible to users but still counted as impressions by ad systems. Fraudsters can stack dozens or even hundreds of ads in these invisible 1×1 pixel placements, with each ad counted toward billing while users see nothing. This technique exploits the fact that ad servers count impressions based on ad loading, not visibility or user awareness. Advertisers pay for impressions that never reached human eyes[32].
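
A size check on rendered ad slots is the most direct way to spot this pattern. The sketch below assumes slot dimensions are available from a page audit; the `AdSlot` type and the 100-square-pixel minimum are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass

@dataclass
class AdSlot:
    slot_id: str
    width: int
    height: int

def is_pixel_stuffed(slot: AdSlot, min_area: int = 100) -> bool:
    """Flag slots too small for a person to see the ad."""
    return slot.width * slot.height < min_area

slots = [
    AdSlot("banner-top", 728, 90),
    AdSlot("hidden-1", 1, 1),
    AdSlot("hidden-2", 1, 1),
    AdSlot("sidebar", 300, 250),
]

stuffed = [s.slot_id for s in slots if is_pixel_stuffed(s)]
print(stuffed)
```

Size alone does not establish fraud, because legitimate tracking pixels are also 1×1. The distinguishing factor is that a billable ad creative, rather than a measurement beacon, is being loaded into the tiny slot.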

Ad Stacking

Ad stacking places multiple advertisements in the same physical location, layered atop each other. Users see only the top ad, yet billing systems count impressions for all ads in the stack—often 3-5 simultaneous ads. A user clicking one car advertisement might trigger billing for car ads, insurance ads, financial service ads, and mobile app ads—all stacked in the same space[33].
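
Stacking can be detected geometrically by checking how much ad rectangles overlap on the page. The sketch below is a minimal version that assumes each ad's position and size are known; the `Rect` type, the ad names, and the 0.9 overlap threshold are assumptions for the example.

```python
from itertools import combinations
from typing import NamedTuple

class Rect(NamedTuple):
    ad_id: str
    x: int
    y: int
    w: int
    h: int

def overlap_ratio(a: Rect, b: Rect) -> float:
    """Intersection area divided by the area of the smaller rectangle."""
    ix = max(0, min(a.x + a.w, b.x + b.w) - max(a.x, b.x))
    iy = max(0, min(a.y + a.h, b.y + b.h) - max(a.y, b.y))
    smaller = min(a.w * a.h, b.w * b.h)
    return (ix * iy) / smaller if smaller else 0.0

def stacked_pairs(ads, threshold=0.9):
    """Return pairs of ads that occupy nearly the same screen area."""
    return [(a.ad_id, b.ad_id) for a, b in combinations(ads, 2)
            if overlap_ratio(a, b) >= threshold]

ads = [
    Rect("car", 0, 0, 300, 250),
    Rect("insurance", 0, 0, 300, 250),
    Rect("finance", 0, 0, 300, 250),
    Rect("sidebar", 400, 0, 160, 600),
]

print(stacked_pairs(ads))
```

The three ads sharing one 300×250 slot produce three overlapping pairs, while the sidebar ad is left alone. A production check would also need to account for legitimate overlaps such as rotating carousels, which this sketch ignores.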

Domain Spoofing

Domain spoofing involves fraudsters creating fake websites that visually mimic legitimate, high-value publishers. Advertisers believe they're buying placements on reputable news sites or trusted platforms but actually receive ads served on fraudulent sites with spoofed domain names. Programmatic buying, operating at millisecond speed, often fails to verify domain legitimacy before purchasing inventory[34].

Cookie Stuffing

Cookie stuffing represents attribution fraud in the affiliate channel. Fraudsters insert third-party cookies onto user devices without knowledge or consent. When users subsequently make purchases, these injected cookies—not the legitimate affiliate referral—claim credit for the conversion. Affiliates lose earned commissions while fraudsters receive undeserved payments. This technique exploits weak cookie validation in affiliate networks and browser vulnerabilities (cross-site scripting, malicious pop-ups)[35].

Cross-Channel and Hybrid Fraud Approaches

The most sophisticated 2025-2026 fraud techniques operate across multiple platforms simultaneously:

Cross-Channel Fraud

Cross-channel fraud sequences fraudulent actions across different platforms (Google Ads, Meta/Facebook, TikTok) in ways that "wash" the fraud signal. A fraudster might click a Google Ad, trigger a Meta pixel conversion through spoofing, then claim conversion attribution across both platforms simultaneously. The fraud signal appears distributed across multiple platforms rather than concentrated in one place, making platform-specific detection systems unable to attribute the fraud source. This invisible budget drain occurs across entire omnichannel advertising stacks[36].

Hybrid Human-Bot Operations

Hybrid human-bot operations combine human workers establishing behavioral patterns with automated systems maintaining those patterns at scale. Humans build initial browsing history and behavioral profiles on accounts, then hand off to bots that replicate exact behavioral signatures while scaling fraudulent activity. This hybrid approach defeats systems that detect behavior changes or deviations from established patterns—the patterns were established fraudulently from inception[37].

Malware-Infected Device Networks

Malware-infected device networks represent the most insidious fraud vector. Legitimate devices infected with malware become unwitting participants in click fraud. The device owner browses normally, unaware their device conducts fraudulent clicks in the background or during idle periods. These botnets contain thousands or millions of compromised devices (computers, smartphones, IoT devices), with command-and-control infrastructure instructing bots to generate coordinated clicks. Fraudsters use real user devices with legitimate histories, making detection nearly impossible—these aren't fake devices or suspicious IPs, but actual consumer devices with real usage patterns interrupted by injected fraudulent traffic[38].

Google's Detection and Protection Infrastructure

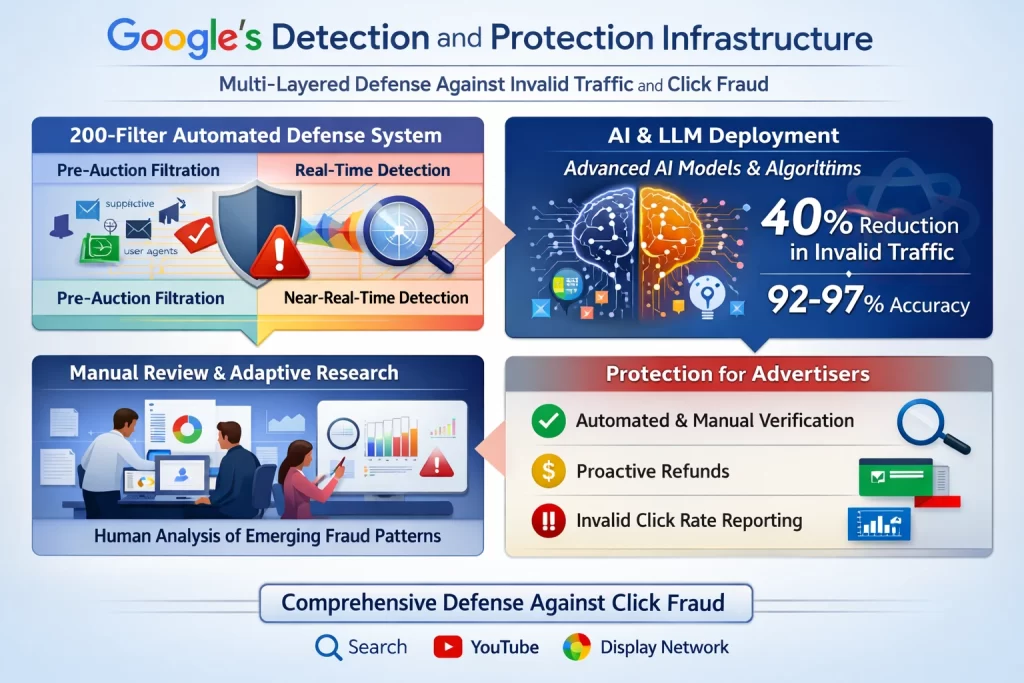

Google's defense against click fraud involves multiple defensive layers operating simultaneously across real-time and post-incident timeframes:

The 200-Filter Automated Defense System

Google employs over 200 sophisticated filters continuously monitoring ad serving, operating across three temporal phases[39]:

Pre-Auction Filtration

Pre-auction filtration prevents invalid traffic before ads even serve or budgets are charged. Known bad actors—denylisted user agents and IP addresses, publishers with suspiciously high clickthrough rates, single users generating anomalous patterns—are blocked before auction participation. This prevents fraudsters from ever reaching advertiser budgets[40].
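
In outline, a pre-auction check of this kind is a lookup against known-bad lists before a request is allowed to bid. The sketch below is a generic illustration and not Google's implementation: the denylisted network (a reserved documentation range), the user agent substrings, and the function name are all placeholders.

```python
import ipaddress

# Placeholder denylist entries for illustration only.
DENYLISTED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
DENYLISTED_AGENT_SUBSTRINGS = ("headlesschrome", "python-requests")

def allowed_into_auction(ip: str, user_agent: str) -> bool:
    """Reject requests from denylisted networks or user agents."""
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in DENYLISTED_NETWORKS):
        return False
    ua = user_agent.lower()
    return not any(marker in ua for marker in DENYLISTED_AGENT_SUBSTRINGS)

requests_seen = [
    ("203.0.113.9", "Mozilla/5.0 (Windows NT 10.0)"),
    ("192.0.2.10", "Mozilla/5.0 HeadlessChrome/120.0"),
    ("192.0.2.10", "Mozilla/5.0 (Windows NT 10.0)"),
]

for ip, ua in requests_seen:
    print(ip, allowed_into_auction(ip, ua))
```

Static lists like these are cheap to evaluate before an auction, which is why they suit this stage. They only catch sources that are already known, so the later detection phases remain necessary.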

Real-Time Detection

Real-time detection analyzes in-flight traffic as it occurs. Google's systems examine IP addresses, user agents, click timing patterns, device fingerprints, behavioral signals, and interaction quality to identify anomalies suggesting fraud. Traffic flagged in real-time is blocked or discarded before reaching advertiser accounts[41].

Near-Real-Time Detection

Near-real-time detection analyzes traffic patterns over hours to weeks. Some fraud patterns emerge only through temporal analysis: consistent behavioral signatures observed across time periods, geographic inconsistencies emerging from aggregated data, or subtle engagement patterns indicating non-human activity. These detections typically trigger credits to advertisers when the traffic was initially charged to their accounts[42].

AI and Large Language Model Deployment

Google's 2025-2026 defensive evolution introduced large language models and advanced AI specifically trained on advertising fraud patterns[43]:

Google's Ad Traffic Quality Team, in collaboration with Google Research and Google DeepMind, deployed LLM-powered applications that analyze multiple signals simultaneously—app and web content characteristics, ad placement context, user interaction patterns, and behavioral signals indicative of invalid traffic. These AI systems achieved a 40% reduction in invalid traffic from deceptive or disruptive ad-serving practices[44]. The language models understand contextual indicators of fraud that rule-based systems miss—for example, distinguishing between legitimate adult content sites (where high clickthrough rates are normal) and fraudulent impression farms disguised as adult content.

Machine learning algorithms analyze hundreds of data signals per click to differentiate invalid from legitimate traffic. These models are trained on massive datasets of known fraudulent and legitimate interactions, learning to identify complex patterns. The systems employ ensemble methods—combining random forests for real-time classification, neural networks for complex pattern recognition, and gradient boosting algorithms for maximum accuracy. Advanced implementations achieve 92-97% accuracy rates, substantially exceeding the 60-75% accuracy of traditional rule-based detection[45].

Manual Review and Adaptive Research

Google maintains dedicated manual review teams that analyze flagged suspicious traffic. These teams separate non-human traffic from normal activity, identify fraud operation signatures, and develop new filters based on emerging techniques. This human element remains critical because fraudsters continuously innovate, requiring adaptive human judgment to recognize novel fraud patterns that automated systems haven't encountered[46].

The research component involves deep investigation into fraud sources, distribution mechanisms, and operational infrastructure. When new fraud techniques emerge, Google Research investigates the underlying technical methods and develops corresponding countermeasures, feeding findings back into the automated filter system[47].

Protection Mechanisms for Advertisers

Google's stated protections include[48][49][50][51]:

- Automated and Manual Verification: Extensive automated checks supplemented by manual review ensure advertisers aren't charged for invalid traffic.

- Proactive Refunds: When invalid traffic is detected after billing, Google automatically issues credits appearing on subsequent invoices.

- Invalid Click Rate Reporting: Advertisers can monitor invalid click percentages in their Google Ads accounts, though detailed forensic data remains unavailable.

- Built-In Filters: Automated filters monitor unusual click-through rates, duplicate IP addresses, and non-human behavior patterns across the Display Network, YouTube, and Search.

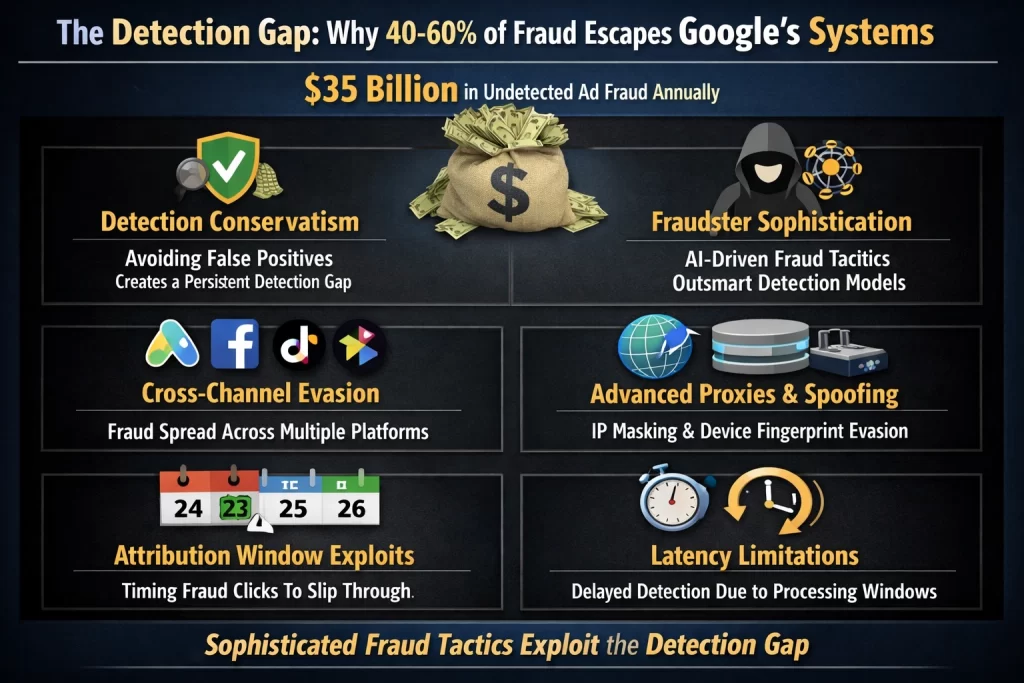

The Detection Gap: Why 40-60% of Fraud Escapes Google's Systems

Despite Google's sophisticated infrastructure, independent research suggests the platform only detects and credits 40-60% of fraudulent clicks, leaving approximately $35 billion in undetected fraud flowing through Google's advertising ecosystem annually[52]. Several factors explain this persistent gap:

Detection Conservatism and False Positive Aversion

Google's detection philosophy prioritizes avoiding false positives—incorrectly flagging legitimate traffic as fraudulent. Advertisers experiencing false flags lose revenue without recourse, creating customer dissatisfaction and potential legal liability. Consequently, Google's systems require very strong evidence before classifying clicks as invalid. Moderately sophisticated fraud introducing sufficient variation to create statistical uncertainty consistently passes detection because Google's conservative threshold demands near-certain proof of fraud. The asymmetry is deliberate: it's "safer" to let some fraud through than risk blocking legitimate traffic[53].
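
The trade-off described above can be made concrete with a scored sample of clicks. The scores and labels below are invented for illustration and the function name is hypothetical; the point is only to show how raising the decision threshold trades false positives for missed fraud.

```python
def errors_at(scored_clicks, threshold):
    """Return (false_positives, missed_fraud) for a given score threshold."""
    false_positives = sum(
        1 for score, is_fraud in scored_clicks if score >= threshold and not is_fraud
    )
    missed_fraud = sum(
        1 for score, is_fraud in scored_clicks if score < threshold and is_fraud
    )
    return false_positives, missed_fraud

# (fraud score from a hypothetical model, true label)
clicks = [
    (0.98, True), (0.91, True), (0.72, True), (0.65, False),
    (0.55, True), (0.30, False), (0.10, False),
]

for threshold in (0.5, 0.9):
    print(threshold, errors_at(clicks, threshold))
```

At a threshold of 0.5, one legitimate click is wrongly invalidated and no fraud is missed. At 0.9, no legitimate click is blocked but two fraudulent clicks pass, which is the behavior a false-positive-averse system accepts by design.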

Emerging Fraud Sophistication Outpacing Detection

Fraudsters employ machine learning systems trained to generate behavioral patterns nearly indistinguishable from those of humans. These AI-generated patterns may be fundamentally different from the patterns Google's detection models were trained on, causing the models to misclassify the traffic as legitimate. The arms race dynamic means detection systems are trained on historical fraud patterns, while fraudsters deploy novel techniques developed after the training data was collected. One-click fraud, where fraudsters execute a single click designed to mimic natural behavior, specifically targets the detection gap by appearing so infrequently that it doesn't trigger volume-based anomaly thresholds[54].

Cross-Channel Attribution Evasion

Cross-channel fraud operations deliberately distribute fraudulent signals across multiple platforms to avoid concentrated detection. When fraudulent activity is distributed across Google Ads, Meta, TikTok, and other platforms simultaneously, each platform's detection systems see only a fragment of the fraud. No single platform has sufficient signal concentration to identify coordinated fraud. This "washing" of fraud signals across channels remains largely undetectable by platform-specific systems[55].

Technical Evasion Through Advanced Proxies and Fingerprinting

Residential proxy networks operate at such scale (millions of rotating IPs) that static blocklists become ineffective. Advanced fingerprinting evasion, anti-fingerprinting browsers, and device configuration spoofing defeat the device identification mechanisms Google relies on. When detection systems cannot reliably identify whether traffic originates from a single device (fraud) or multiple devices (legitimate), fraud operating through these channels passes through[56].

Latency and Attribution Window Limitations

Attribution systems necessarily operate within time windows—typically 7-30 days for display ads, 30 days for search clicks. Click injection fraud exploits this by injecting fraudulent clicks at the attribution window boundary. If a user organically installs an app on day 25 and the fraudster injects an attribution click on day 24, the fraudulent click receives credit within the attribution window. The fraud is technically undetectable because the timing appears legitimate according to system rules[57].

Emerging and Future Fraud Trends

State-Sponsored and Enterprise-Targeted Operations

Research from 2025-2026 indicates the emergence of state-sponsored click farm operations specifically targeting enterprise advertiser budgets. These operations employ advanced techniques including adaptive machine learning that automatically adjusts fraud methods when detection is encountered. Unlike criminal fraud rings seeking quick profits, state-sponsored actors may seek to sabotage business operations or drain competitive resources more systematically[58].

AI-Powered Adaptive Evasion Systems

The most sophisticated fraud operations now employ machine learning systems that automatically detect when their fraud methods trigger detection systems, then adaptively modify their approach. This real-time adaptive evasion mimics continuous integration/continuous deployment (CI/CD) practices used in legitimate software development—fraudsters deploy fraud, monitor detection response, and automatically update fraud techniques in response. Detection systems based on static rules or training data cannot keep pace with continuously evolving fraud patterns[59].

Deepfake and Synthetic Behavioral Generation

As AI systems improve, fraudsters are generating synthetic user behaviors that are statistically valid but never actually performed by humans. These synthetic patterns, generated through generative adversarial networks or other AI techniques, may pass detection because they technically conform to "legitimate" behavioral distributions, even though they represent artificial traffic[60].

Integrated Omnichannel Fraud Operations

Fraud rings increasingly operate integrated fraud-as-a-service businesses offering comprehensive fraud solutions spanning Google Ads, Meta, TikTok, and programmatic networks. These operations coordinate fraud across channels, making platform-specific detection inadequate. Fraudsters report developing "full or partial solutions to bypass click fraud defenses" as commercial offerings[61].

Financial Losses and Industry-Specific Vulnerabilities

Fraud vulnerability varies dramatically by industry based on CPC value and targeting difficulty:

| Industry/Segment | Estimated Fraud Impact | Primary Attack Vectors | Detection Difficulty |
|---|---|---|---|
| Finance & Legal | 35% of spend | High-CPC targeting, competitor sabotage | Extreme (highest CPC = highest value) |
| Mobile App Marketing | 20-40% of installs | SDK spoofing, click injection, install hijacking | Extreme (attribution system vulnerability) |
| B2B SaaS | 25-30% of spend | Competitor budget draining, competitor IP targeting | High (easy to identify competitors) |
| E-commerce | 15-20% of spend | Affiliate fraud, cookie stuffing | High (diverse traffic sources) |
| Publisher Revenue | High variability | Self-click fraud, purchased bot traffic | Medium (monitored by networks) |
| Display Network | 19% historically | Pixel stuffing, ad stacking, domain spoofing | High (programmatic scale) |

High-cost industries like finance and legal suffer the most damage in absolute dollars because clicks in these verticals command premium CPCs. A single fraudulent click in legal services might cost $50-100+, while a fraudulent click on a consumer product ad might cost $0.50. Programmatic and mobile channels show the highest vulnerability percentages because programmatic auctions settle in milliseconds, leaving little room for thorough fraud checks before an ad is served, and because mobile attribution systems inherently trust SDK signals[62][63].

Advertiser Losses: Beyond Direct Budget Drain

The impact of click fraud extends beyond the direct cost of fraudulent clicks:

Campaign Data Corruption

Fraudulent traffic distorts performance metrics, causing advertisers to make suboptimal decisions. A campaign appearing to generate conversions from fraud-contaminated traffic creates false confidence in underperforming audiences. Advertisers continue budget allocation to these sources, compounding losses[64].

Machine Learning Poisoning

Google's Performance Max and Demand Gen (formerly Discovery) campaigns use machine learning to optimize audience targeting and bid allocation. Fraudulent data poisons the training signals: the algorithms learn from fraud and misallocate budget to channels that appear high-performing but actually consist of worthless invalid traffic[65].

Attribution Misattribution

When fraudulent clicks are interspersed with legitimate traffic, attribution systems incorrectly credit campaigns for conversions they didn't drive. This leads to systematically overestimating ROI on certain channels, encouraging over-investment in fraud-plagued sources[66].

Conversion Rate Suppression

While fraudulent clicks inflate click volume, they rarely generate genuine conversions. If a campaign receives 100 fraudulent clicks and 10 legitimate clicks from the same budget, the calculated conversion rate is artificially suppressed: the real CVR is masked by the inflated click denominator, which can trigger algorithm adjustments that reduce spend on campaigns that are actually performing[67].
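The suppression effect is easy to see with the click counts from the example above. The sketch below is only illustrative: the figure of 2 legitimate conversions is an assumption added here, not a number from the cited sources.

```python
def conversion_rate(conversions: int, clicks: int) -> float:
    """Return conversions per click, or 0.0 when there are no clicks."""
    return conversions / clicks if clicks else 0.0


legit_clicks = 10
fraud_clicks = 100
legit_conversions = 2  # assumed for illustration only

# CVR the legitimate audience actually delivers.
true_cvr = conversion_rate(legit_conversions, legit_clicks)

# CVR the platform reports once fraudulent clicks inflate the denominator.
observed_cvr = conversion_rate(legit_conversions, legit_clicks + fraud_clicks)

print(f"true CVR: {true_cvr:.1%}, observed CVR: {observed_cvr:.1%}")
# prints "true CVR: 20.0%, observed CVR: 1.8%"
```

Under these assumed numbers the reported rate is roughly one eleventh of the real one, which is the kind of gap that can push an automated bidder to cut spend on a healthy campaign.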

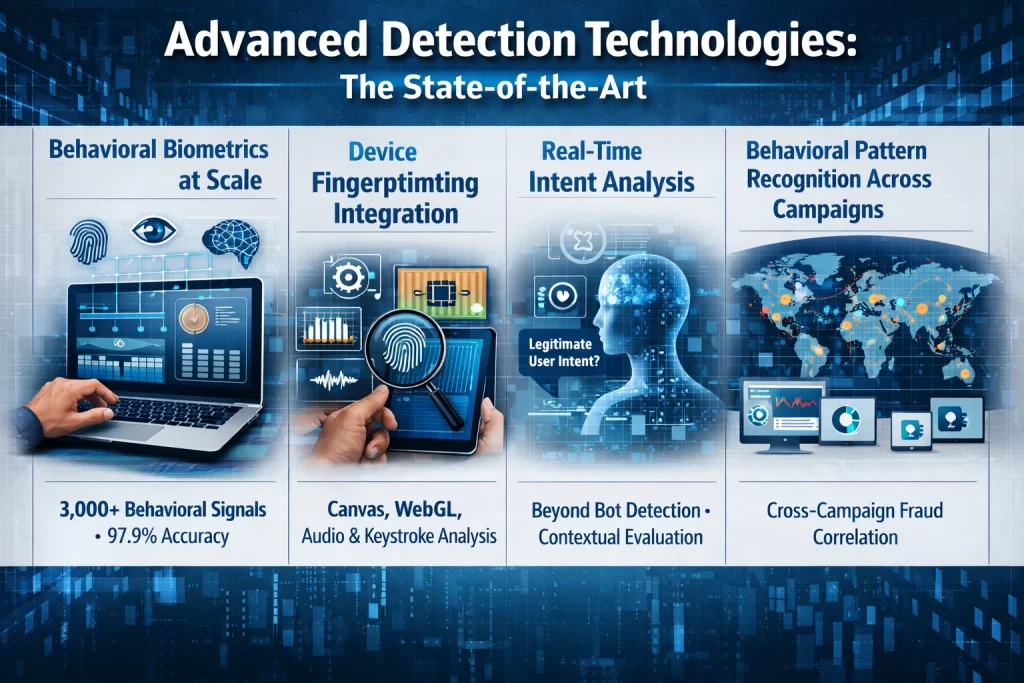

Advanced Detection Technologies: The State-of-the-Art

Leading-edge fraud detection now employs multiple advanced technologies operating in concert:

Behavioral Biometrics at Scale

Advanced systems analyze 3,000+ distinct signals including mouse movement velocity, keystroke dynamics, scroll patterns, hesitation points while reading content, and session timing patterns[71]. Machine learning models establish baselines of legitimate user behavior, then flag deviations. LSTM neural networks (deep learning architectures specifically designed for sequence analysis) achieve 97.9% accuracy in detecting fraudulent behaviors versus 80-88% for traditional machine learning approaches[72].
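The "establish a baseline, then flag deviations" idea can be sketched with plain z-scores. This is a deliberately simplified stand-in, not the LSTM approach cited above, and the feature names, sample values, and threshold of 3 are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def build_baseline(sessions):
    """Per-feature (mean, stdev) computed from sessions believed to be legitimate."""
    baseline = {}
    for feature in sessions[0]:
        values = [s[feature] for s in sessions]
        baseline[feature] = (mean(values), stdev(values))
    return baseline


def deviation_score(session, baseline):
    """Largest absolute z-score across all baseline features."""
    scores = [
        abs(session[feature] - mu) / sigma
        for feature, (mu, sigma) in baseline.items()
        if sigma > 0
    ]
    return max(scores, default=0.0)


# Hypothetical feature set: mouse speed (px/s), dwell time (s), scroll events.
legit_sessions = [
    {"mouse_speed": 310, "dwell_s": 42, "scrolls": 9},
    {"mouse_speed": 280, "dwell_s": 35, "scrolls": 7},
    {"mouse_speed": 350, "dwell_s": 51, "scrolls": 12},
    {"mouse_speed": 300, "dwell_s": 38, "scrolls": 8},
]
baseline = build_baseline(legit_sessions)

typical = {"mouse_speed": 320, "dwell_s": 40, "scrolls": 10}
suspect = {"mouse_speed": 2400, "dwell_s": 0.4, "scrolls": 0}

THRESHOLD = 3.0  # assumed cut-off; a real system would tune this
for name, session in (("typical", typical), ("suspect", suspect)):
    flagged = deviation_score(session, baseline) > THRESHOLD
    print(name, "flagged" if flagged else "ok")
```

A production system would use far more signals and a sequence model rather than independent per-feature statistics, but the shape is the same: learn what normal looks like, then score distance from it.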

Device Fingerprinting Integration

Modern detection combines multiple fingerprinting techniques: canvas fingerprinting (rendering text and measuring pixel-level rendering differences), WebGL fingerprinting (GPU and graphics driver characteristics), and audio fingerprinting (audio rendering variations), often alongside behavioral signals such as keystroke dynamics[73]. The combined fingerprint is specific enough that spoofing it at scale would require a very large pool of distinct devices or device profiles, which substantially raises the cost of running fraud at volume.
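One way to picture how separate signals become a single device identifier is to hash them together. The sketch below assumes the individual signal values have already been collected in the browser; the field names and values are hypothetical, and real products use their own (typically fuzzier) matching rather than an exact hash.

```python
import hashlib
import json


def composite_fingerprint(signals: dict) -> str:
    """Hash a set of fingerprint signals into one stable hex identifier."""
    # Sorting keys makes the result independent of collection order.
    canonical = json.dumps(signals, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical signal values for one device.
device_a = {
    "canvas_hash": "9f2c41",
    "webgl_renderer": "ExampleGPU 1000",
    "audio_hash": "77b0e3",
}
# Same device, signals collected in a different order.
device_a_reordered = {
    "audio_hash": "77b0e3",
    "canvas_hash": "9f2c41",
    "webgl_renderer": "ExampleGPU 1000",
}
# A device that differs in only one signal.
device_b = dict(device_a, webgl_renderer="ExampleGPU 2000")

print(composite_fingerprint(device_a) == composite_fingerprint(device_a_reordered))  # True
print(composite_fingerprint(device_a) == composite_fingerprint(device_b))            # False
```

Because a change in any one signal produces a different identifier, a fraudster has to vary every component consistently to present as a new device, which is where the cost of spoofing comes from.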

Real-Time Intent Analysis

Advanced AI systems now focus on user intent rather than binary human/bot classification. The question shifts from "Is this a real person?" to "Does this person's behavior suggest legitimate interest in the advertised offering?" This intent-based approach is more robust to behavioral mimicry because it evaluates whether the overall interaction pattern makes sense—for example, fraudulent traffic typically doesn't engage with actual conversion flows or complete meaningful transactions[74].

Behavioral Pattern Recognition Across Campaigns

Cross-campaign analysis identifies fraud operations targeting multiple campaigns simultaneously with coordinated patterns. Fraud that appears isolated in a single campaign reveals itself when viewed across the advertiser's entire account—specific IP ranges, devices, or behavioral patterns repeated across campaigns indicate coordinated fraud rather than random invalid traffic[75].
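A minimal sketch of cross-campaign analysis, assuming click logs are available as records with `ip` and `campaign` fields (hypothetical names) and that appearing in three or more campaigns is worth a closer look (an arbitrary threshold chosen for illustration):

```python
from collections import defaultdict


def shared_sources(clicks, min_campaigns=3):
    """Return IPs that clicked on at least `min_campaigns` distinct campaigns."""
    campaigns_by_ip = defaultdict(set)
    for click in clicks:
        campaigns_by_ip[click["ip"]].add(click["campaign"])
    return {
        ip: sorted(campaigns)
        for ip, campaigns in campaigns_by_ip.items()
        if len(campaigns) >= min_campaigns
    }


# Sample log using documentation-reserved IP addresses.
click_log = [
    {"ip": "203.0.113.7", "campaign": "brand"},
    {"ip": "203.0.113.7", "campaign": "competitor-terms"},
    {"ip": "203.0.113.7", "campaign": "retargeting"},
    {"ip": "198.51.100.4", "campaign": "brand"},
    {"ip": "198.51.100.4", "campaign": "brand"},
]

print(shared_sources(click_log))
# {'203.0.113.7': ['brand', 'competitor-terms', 'retargeting']}
```

The same grouping works with a device fingerprint or behavioral signature in place of the IP. Viewed per campaign, the first address contributes a single unremarkable click each time; only the account-wide view shows the pattern.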

Recommendations for Advertisers and the Industry

For Individual Advertisers

- Implement Third-Party Fraud Detection: Do not rely solely on Google's protections. Supplementary third-party tools that use independent detection algorithms can identify fraud that Google's systems miss, protecting the estimated 10-15% of budget lost to fraud that Google does not catch[76].

- Establish Conversion Quality Signals: Rather than optimizing for clicks, require multi-step conversion signals (account activation, meaningful engagement, deposit intent for financial products) before counting traffic as valuable. Click farms complete surface actions but rarely follow through to conversion[77].

- Monitor Fraud Indicators Directly: Track click-to-engagement conversion flow. A source generating 1,000 clicks but zero engagement (no page scroll, no form interaction, immediate bounces) is likely fraudulent regardless of Google's classification[78].

- Implement Fraud Scoring Across IPs: Maintain an internal denylist of IPs that consistently produce zero-conversion clicks. Even legitimate-appearing IPs from residential proxies can be identified through zero-engagement patterns.

- Segment Performance by Traffic Source: Don't allow aggregated metrics to mask fraud. Compare performance across geographic regions, device types, and time periods. Fraud is often localized to specific combinations—for example, fraud might concentrate in off-hours or specific geographies, revealing itself only when segmented[79].
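The monitoring, denylist, and segmentation recommendations above share one mechanic: group clicks by some key and look for groups with meaningful volume but no engagement. The sketch below assumes each click record carries an `engaged` flag (for example, any scroll or form interaction) along with `ip`, `geo`, and `device` fields; all field names and the 20-click minimum are hypothetical.

```python
from collections import Counter


def zero_engagement_groups(clicks, key_fields, min_clicks=20):
    """Return groups with at least `min_clicks` clicks and no engaged click."""
    totals = Counter()
    engaged = Counter()
    for click in clicks:
        key = tuple(click[field] for field in key_fields)
        totals[key] += 1
        if click["engaged"]:
            engaged[key] += 1
    return sorted(
        key for key, count in totals.items()
        if count >= min_clicks and engaged[key] == 0
    )


# Sample data; "XX" is a placeholder region code.
clicks = (
    [{"ip": "203.0.113.7", "geo": "XX", "device": "mobile", "engaged": False}] * 25
    + [{"ip": "198.51.100.4", "geo": "US", "device": "desktop", "engaged": True}] * 5
    + [{"ip": "198.51.100.9", "geo": "US", "device": "desktop", "engaged": False}] * 3
)

# Candidate entries for an internal IP denylist.
print(zero_engagement_groups(clicks, ("ip",)))
# [('203.0.113.7',)]

# The same check applied to geography/device segments.
print(zero_engagement_groups(clicks, ("geo", "device")))
# [('XX', 'mobile')]
```

The minimum-volume guard matters: the third address also has zero engagement, but three clicks are too few to conclude anything, so it is not flagged.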

For the Industry

- Demand Granular Reporting: Advertisers should collectively push platforms for detailed invalid click reporting at placement, source, and temporal levels. The opacity currently protecting platforms from liability should not persist—advertisers paying for traffic deserve forensic visibility.

- Develop Cross-Platform Attribution Standards: The rise of cross-channel fraud requires industry coordination on attribution and fraud detection standards. Individual platforms cannot solve coordination problems that span multiple platforms.

- Invest in Behavioral Biometrics: The industry should standardize behavioral biometric data collection (mouse movement, keystroke dynamics, scroll patterns) as core fraud detection infrastructure. These signals are inherently difficult to spoof at scale.

- Establish Fraud Rate Benchmarking: Fraud rate benchmarks developed by an industry consortium would let advertisers judge whether their fraud exposure is normal, elevated, or unusually low, and would pressure platforms to improve detection toward those benchmarks.

Conclusion

Click farm fraud represents a sophisticated, continuously evolving threat costing the digital advertising industry over $100 billion annually. While Google maintains the most advanced fraud detection infrastructure in the industry—including 200+ automated filters, AI-powered large language models, behavioral biometrics, and dedicated human review teams—the persistent reality is that approximately 40-60% of fraud escapes detection, with $35 billion annually flowing through Google's platforms undetected and uncredited.

Fraudsters have systematized deception into a scalable business model, combining human workers with bots, malware networks, and AI-powered behavioral mimicry systems. They deploy sophisticated IP evasion through residential proxies, defeat device fingerprinting through anti-fingerprinting browsers and device rotation, and wash fraud signals across multiple platforms to evade detection. The most advanced operations employ machine learning that adaptively modifies fraud techniques when detection is encountered, creating an arms race where detection systems built on historical fraud patterns struggle to identify novel techniques developed after training data collection.

Google's defenses continue to evolve, particularly with 2025-2026 deployments of large language models that achieved a 40% reduction in deceptive ad serving fraud. Yet the fundamental limitation persists: detection conservatism to avoid false positives, combined with emerging fraud sophistication that outpaces detection model updates, means a substantial portion of fraud remains invisible to platform systems.

For advertisers, the implication is clear: platform protections are necessary but insufficient. Supplementary third-party fraud detection, conversion quality signal monitoring, performance segmentation, and behavioral analysis remain essential to protect advertising budgets. For the industry, greater transparency, cross-platform coordination, and standardized behavioral biometric approaches represent the path toward reducing the $100+ billion annual fraud drain that distorts advertiser ROI and erodes trust in digital advertising as an effective customer acquisition channel.

References (APA Format)

[1] Clickfortify. (2025, December 31). Click fraud statistics 2026: Comprehensive report. Retrieved from https://www.clickfortify.com/blog/click-fraud-statistics-2026-comprehensive-report

[2] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[6] Clickfortify. (2025, December 31). Click fraud statistics 2026: Comprehensive report. Retrieved from https://www.clickfortify.com/blog/click-fraud-statistics-2026-comprehensive-report

[7] Clicksambo. (2025, December 10). PPC fraud protection in 2026: New threats & strategies. Retrieved from https://clicksambo.com/blog-detail/ppc-fraud-protection-2026

[8] Passion Digital. (2025, September 29). Invalid traffic: The hidden threat draining your Google Ads budget. Retrieved from https://passion.digital/blog/the-hidden-threat-draining-your-google-ads-budget-how-to-detect-and-prevent-invalid-traffic/

[9] Clicksambo. (2025, December 10). PPC fraud protection in 2026: New threats & strategies. Retrieved from https://clicksambo.com/blog-detail/ppc-fraud-protection-2026

[10] TrafficGuard. (2024, March 31). Click fraud statistics 2026: Global costs & key trends. Retrieved from https://www.trafficguard.ai/click-fraud-statistics

[11] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[12] Fraudlogix. (2025, December 22). Click farms: What they are & how to stop them. Retrieved from https://www.fraudlogix.com/glossary/what-are-click-farms-and-how-to-stop-them/

[13] Fraudlogix. (2025, December 22). Click farms: What they are & how to stop them. Retrieved from https://www.fraudlogix.com/glossary/what-are-click-farms-and-how-to-stop-them/

[14] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[15] Potanin, A. (2025, March 5). Click bombing 2025: Defending against fraudulent ad clicks. Retrieved from http://andypotanin.com/click-bombing-2025/

[16] Fraudlogix. (2025, December 22). Residential proxy: Detection evasion & fraud prevention. Retrieved from https://www.fraudlogix.com/glossary/what-is-a-residential-proxy/

[17] Hitprobe. (2025, July 6). VPNs & proxies: Friend, foe, or false alarm for click fraud? Retrieved from https://hitprobe.com/blog/vpns-proxies

[18] Hitprobe. (2025, July 6). VPNs & proxies: Friend, foe, or false alarm for click fraud? Retrieved from https://hitprobe.com/blog/vpns-proxies

[19] Clickfortify. (2025, December 31). What is click fraud? Complete guide. Retrieved from https://www.clickfortify.com/blog/what-is-click-fraud-complete-guide

[20] HumanSecurity. (2026, January 11). Understanding click fraud tactics: Advanced bots and click farms. Retrieved from https://www.humansecurity.com/learn/blog/click-fraud-bots-click-farms/

[21] DataDome. (2026, January 14). How AI is used in fraud detection in 2025. Retrieved from https://datadome.co/learning-center/ai-fraud-detection/

[22] GetFocal. (2025, June 2). What is device fingerprinting? How does it fight fraud? Retrieved from https://www.getfocal.ai/knowledgebase/what-is-device-fingerprinting

[23] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[24] Clickfortify. (2025, December 31). What is click fraud? Complete guide. Retrieved from https://www.clickfortify.com/blog/what-is-click-fraud-complete-guide

[25] Potanin, A. (2025, March 5). Click bombing 2025: Defending against fraudulent ad clicks. Retrieved from http://andypotanin.com/click-bombing-2025/

[26] DataDome. (2026, January 14). How AI is used in fraud detection in 2025. Retrieved from https://datadome.co/learning-center/ai-fraud-detection/

[27] ClickCease. (2023, February 1). Spotting SDK spoofing & mobile ad fraud. Retrieved from https://www.clickcease.com/blog/sdk-spoofing-mobile-ad-fraud/

[28] ClickCease. (2023, February 1). Spotting SDK spoofing & mobile ad fraud. Retrieved from https://www.clickcease.com/blog/sdk-spoofing-mobile-ad-fraud/

[29] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[30] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[31] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[32] Fraudlogix. (2025, January 9). Pixel stuffing & cookie stuffing | Ad & affiliate fraud 101. Retrieved from https://www.fraudlogix.com/blog/ad-fraud-101-understanding-the-differences-between-pixel-stuffing-and-cookie-stuffing/

[33] Mailchimp. (2024, December 16). How to detect and prevent click fraud. Retrieved from https://mailchimp.com/resources/click-fraud/

[34] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[35] Fraudlogix. (2025, January 9). Pixel stuffing & cookie stuffing | Ad & affiliate fraud 101. Retrieved from https://www.fraudlogix.com/blog/ad-fraud-101-understanding-the-differences-between-pixel-stuffing-and-cookie-stuffing/

[36] Clicksambo. (2025, December 10). PPC fraud protection in 2026: New threats & strategies. Retrieved from https://clicksambo.com/blog-detail/ppc-fraud-protection-2026

[37] Potanin, A. (2025, March 5). Click bombing 2025: Defending against fraudulent ad clicks. Retrieved from http://andypotanin.com/click-bombing-2025/

[38] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[39] Google. (n.d.). How we prevent invalid traffic - Ad Traffic Quality. Retrieved from https://www.google.com/ads/adtrafficquality/how-we-prevent-it/

[40] Google. (n.d.). How we prevent invalid traffic - Ad Traffic Quality. Retrieved from https://www.google.com/ads/adtrafficquality/how-we-prevent-it/

[41] Google Support. (2026, January 6). Managing invalid traffic - Google Ads Help. Retrieved from https://support.google.com/google-ads/answer/11182074?hl=en

[42] Google. (n.d.). How we prevent invalid traffic - Ad Traffic Quality. Retrieved from https://www.google.com/ads/adtrafficquality/how-we-prevent-it/

[43] Google Blog. (2025, August 11). How we're using AI in new ways to fight invalid traffic. Retrieved from https://blog.google/products/ads-commerce/using-ai-to-fight-invalid-ad-traffic/

[44] Google Blog. (2025, August 11). How we're using AI in new ways to fight invalid traffic. Retrieved from https://blog.google/products/ads-commerce/using-ai-to-fight-invalid-ad-traffic/

[45] Clickfortify. (2025, December 31). Google Ads bot traffic protection: Ultimate guide. Retrieved from https://www.clickfortify.com/blog/bot-traffic-protection-google-ads-campaigns

[46] Google. (n.d.). How we prevent invalid traffic - Ad Traffic Quality. Retrieved from https://www.google.com/ads/adtrafficquality/how-we-prevent-it/

[47] Google. (n.d.). How we prevent invalid traffic - Ad Traffic Quality. Retrieved from https://www.google.com/ads/adtrafficquality/how-we-prevent-it/

[48] Google Support. (2026, January 6). Managing invalid traffic - Google Ads Help. Retrieved from https://support.google.com/google-ads/answer/11182074?hl=en

[49] Google Support. (2026, January 6). Managing invalid traffic - Google Ads Help. Retrieved from https://support.google.com/google-ads/answer/11182074?hl=en

[50] Passion Digital. (2025, September 29). Invalid traffic: The hidden threat draining your Google Ads budget. Retrieved from https://passion.digital/blog/the-hidden-threat-draining-your-google-ads-budget-how-to-detect-and-prevent-invalid-traffic/

[51] Passion Digital. (2025, September 29). Invalid traffic: The hidden threat draining your Google Ads budget. Retrieved from https://passion.digital/blog/the-hidden-threat-draining-your-google-ads-budget-how-to-detect-and-prevent-invalid-traffic/

[52] Clickfortify. (2025, December 31). Click fraud statistics 2026: Comprehensive report. Retrieved from https://www.clickfortify.com/blog/click-fraud-statistics-2026-comprehensive-report

[53] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[54] Integral Ads. (2025, May 16). What is click fraud: Cost & prevention. Retrieved from https://integralads.com/insider/what-is-click-fraud/

[55] Clicksambo. (2025, December 10). PPC fraud protection in 2026: New threats & strategies. Retrieved from https://clicksambo.com/blog-detail/ppc-fraud-protection-2026

[56] SPUR. (2026, January 20). What is a residential proxy? Definition, risks & detection. Retrieved from https://spur.us/what-is-a-residential-proxy/

[57] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[58] Clicksambo. (2025, December 10). PPC fraud protection in 2026: New threats & strategies. Retrieved from https://clicksambo.com/blog-detail/ppc-fraud-protection-2026

[59] Potanin, A. (2025, March 5). Click bombing 2025: Defending against fraudulent ad clicks. Retrieved from http://andypotanin.com/click-bombing-2025/

[60] DataDome. (2026, January 14). How AI is used in fraud detection in 2025. Retrieved from https://datadome.co/learning-center/ai-fraud-detection/

[61] HumanSecurity. (2026, January 11). Understanding click fraud tactics: Advanced bots and click farms. Retrieved from https://www.humansecurity.com/learn/blog/click-fraud-bots-click-farms/

[62] Clicksambo. (2025, December 10). PPC fraud protection in 2026: New threats & strategies. Retrieved from https://clicksambo.com/blog-detail/ppc-fraud-protection-2026

[63] Passion Digital. (2025, September 29). Invalid traffic: The hidden threat draining your Google Ads budget. Retrieved from https://passion.digital/blog/the-hidden-threat-draining-your-google-ads-budget-how-to-detect-and-prevent-invalid-traffic/

[64] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[65] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[66] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[67] Mailchimp. (2024, December 16). How to detect and prevent click fraud. Retrieved from https://mailchimp.com/resources/click-fraud/

[71] Facephi. (2025, September 2). Behavioural biometrics for fraud prevention. Retrieved from https://facephi.com/en/behavioural-biometrics-fraud/

[72] Journal AJRCOS. (2025, April 7). AI-powered behavioural biometrics for fraud detection in digital banking: A next-generation approach to financial cybersecurity. Retrieved from https://journalajrcos.com/index.php/AJRCOS/article/view/632

[73] Stytch. (2022, December 31). Implementing fraud detection techniques for the AI era. Retrieved from https://stytch.com/blog/browser-fingerprinting/

[74] DataDome. (2026, January 14). How AI is used in fraud detection in 2025. Retrieved from https://datadome.co/learning-center/ai-fraud-detection/

[75] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[76] Passion Digital. (2025, September 29). Invalid traffic: The hidden threat draining your Google Ads budget. Retrieved from https://passion.digital/blog/the-hidden-threat-draining-your-google-ads-budget-how-to-detect-and-prevent-invalid-traffic/

[77] Fraudlogix. (2025, December 22). Click farms: What they are & how to stop them. Retrieved from https://www.fraudlogix.com/glossary/what-are-click-farms-and-how-to-stop-them/

[78] Clickfortify. (2025, December 31). The complete guide to Google Ads fraud. Retrieved from https://www.clickfortify.com/blog/complete-guide-google-ads-fraud

[79] Passion Digital. (2025, September 29). Invalid traffic: The hidden threat draining your Google Ads budget. Retrieved from https://passion.digital/blog/the-hidden-threat-draining-your-google-ads-budget-how-to-detect-and-prevent-invalid-traffic/

Related Articles

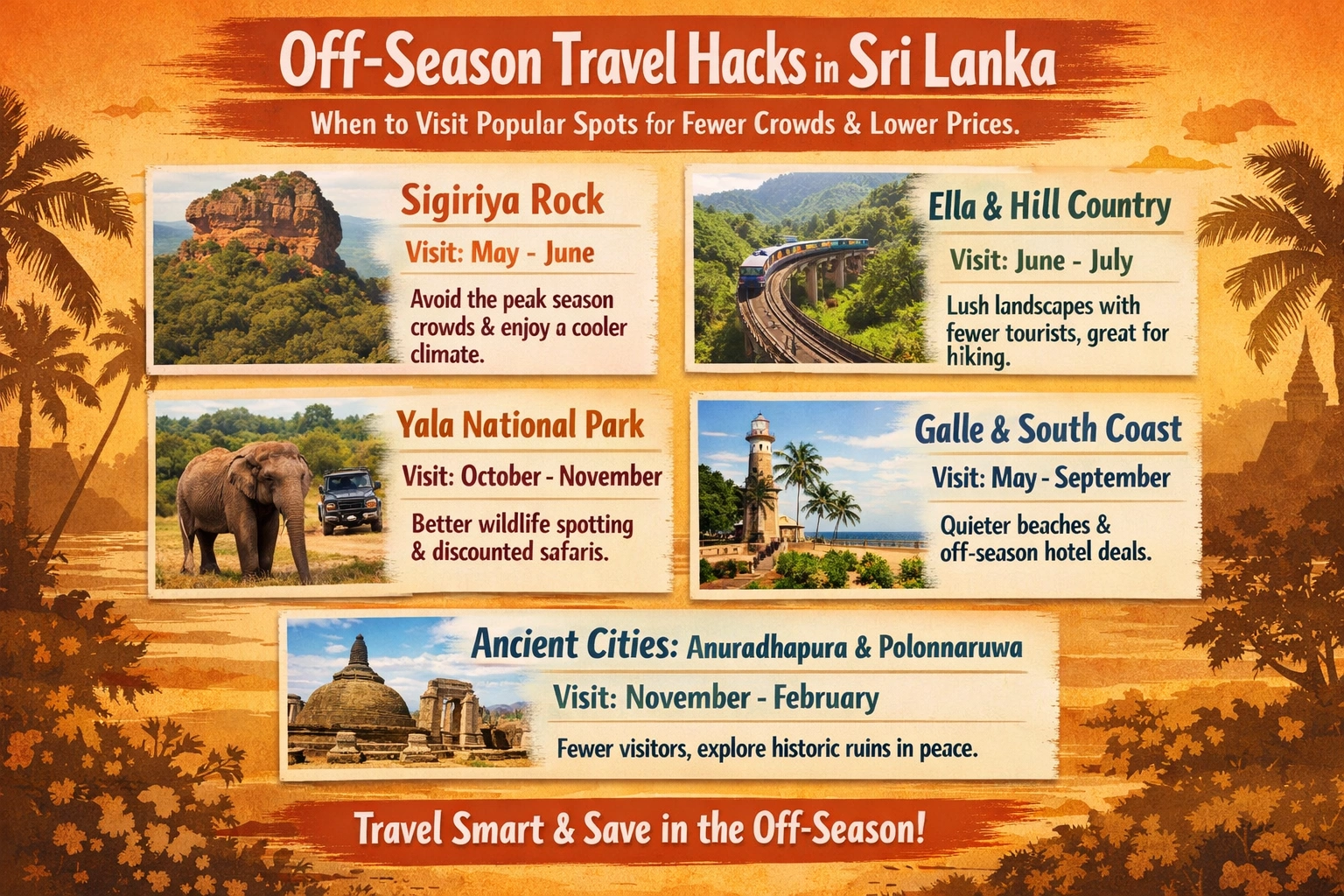

Off‑season travel hacks in Sri Lanka: When to visit popular spots for fewer crowds and lower prices.

Why off-season Sri Lanka is a smart move Planning Sri Lanka in the so‑called “low” or off‑season is one of the easiest ways to unlock a quieter, cheaper and more authentic version of the island, without sacrificing the core experiences that make it special. With smart timing and a bit of flexibili...

Best invoicing and accounting software for freelancers and small agencies - In-Depth Guide

Introduction to Invoicing and Accounting Software for Freelancers and Small Agencies Freelancers and small agencies often juggle multiple clients, projects, and deadlines, making efficient financial management essential. Invoicing and accounting software streamlines these tasks by addressing core n...